Introduction to Bernoulli and Binomial Distributions

The Bernoulli Distribution is a discrete probability distribution that describes a single trial with exactly two possible outcomes, usually called “success” and “failure”. It assigns probability p to success and probability q = 1 - p to failure, so the two probabilities always sum to 1.

Flipping a coin, for instance, is a Bernoulli trial: if heads counts as success, p is the probability of heads and q is the probability of tails. Each flip has exactly two possible outcomes (heads or tails), so p + q = 1.

The Bernoulli Distribution is simple but widely applicable. It models any single yes/no event, such as whether one coin flip lands heads, and it is the building block for questions about how many of a given number of trials succeed.

The Bernoulli Distribution is a special case of the more general Binomial Distribution, which describes the number of successes across multiple independent Bernoulli trials with the same success probability. The Binomial Distribution is more general, but its extra parameter (the number of trials) also makes it somewhat less intuitive.

The Bernoulli Distribution is an invaluable tool for analyzing a range of phenomena, and understanding it will enable you to make more accurate predictions.

What is a Bernoulli distribution?

A Bernoulli Distribution describes a random experiment with exactly two possible outcomes, commonly called success and failure. There is no third outcome.

Flipping a coin is an example of a Bernoulli trial. Let p be the probability of heads and q = 1 - p the probability of tails; for a fair coin both equal 0.5, but in general they may differ. A single flip yields either heads, which happens with probability p, or tails, which happens with probability q.

The Bernoulli Distribution is simple but models many phenomena. For instance, it describes whether a single coin flip lands heads, or whether a single trial of an experiment succeeds.

The Binomial Distribution is a more general version of the Bernoulli Distribution: it counts the successes across several independent Bernoulli trials. It is more complex but also more comprehensive.

Here are some examples: a fair coin flip lands heads with probability 1/2; a roll of a fair die shows a six with probability 1/6 (treating “six” as success and any other face as failure); and an answer to a true-or-false question is either right or wrong.
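The coin-flip trial described above can be sketched in a few lines of Python. This is only an illustration: the function names `bernoulli_pmf` and `bernoulli_trial`, the fair-coin value p = 0.5, and the fixed seed are assumptions made for the example, not part of any particular library.

```python
import random


def bernoulli_pmf(x: int, p: float) -> float:
    """Return P(X = x) for a Bernoulli(p) variable; x is 1 (success) or 0 (failure)."""
    if x not in (0, 1):
        raise ValueError("x must be 0 or 1")
    return p if x == 1 else 1.0 - p


def bernoulli_trial(p: float, rng: random.Random) -> int:
    """Run one trial: return 1 (success) with probability p, otherwise 0 (failure)."""
    return 1 if rng.random() < p else 0


p = 0.5                 # assumed: a fair coin, with heads counted as success
rng = random.Random(0)  # assumed: fixed seed so the run is repeatable
flip = bernoulli_trial(p, rng)

print("outcome:", "heads" if flip == 1 else "tails")
print("P(heads) =", bernoulli_pmf(1, p))
print("P(tails) =", bernoulli_pmf(0, p))
```

Changing p to a value such as 1/6 would model the “six or not six” view of a die roll instead of a coin.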

Understanding Bernoulli Distribution can help you make more accurate predictions.

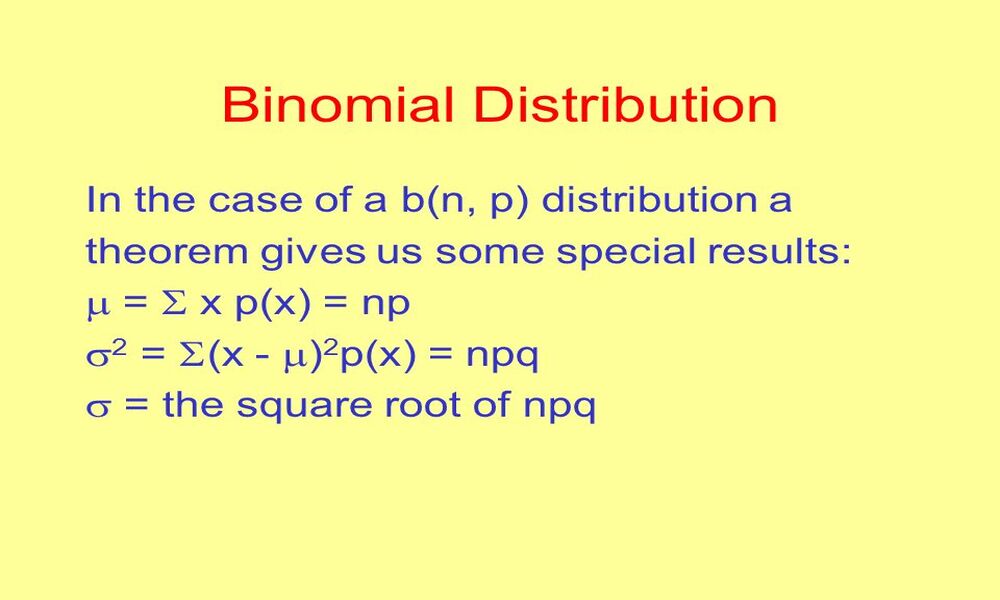

What is a Binomial distribution?

In probability theory, the Binomial Distribution is a discrete distribution that describes the number of successes in a fixed number n of independent Bernoulli trials, each with the same success probability p.

The probability of exactly k successes is P(X = k) = (n choose k) * p^k * (1-p)^(n-k). The binomial coefficient (n choose k) = n! / (k! (n-k)!) counts the number of ways to select which k of the n trials are the successes.

To calculate the probability of exactly three heads in 10 flips of a fair coin, use this formula with n = 10, k = 3, and p = 0.5:


P(X = 3) = (10 choose 3) * (0.5)^3 * (0.5)^7 = 120 * (0.5)^10 ≈ 0.117

The Binomial Distribution is a discrete distribution: it assigns probabilities to whole-number counts of successes. It is frequently employed in statistical work and differs from continuous distributions such as the normal distribution.
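The three-heads-in-ten-flips calculation can be checked with a short Python sketch. The helper name `binomial_pmf` is an assumption made for this example; `math.comb` is the standard-library binomial coefficient (Python 3.8 or later).

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Return P(X = k): the probability of exactly k successes in n independent trials."""
    # comb(n, k) counts the ways to choose which k of the n trials succeed.
    return comb(n, k) * p**k * (1 - p) ** (n - k)


prob = binomial_pmf(3, 10, 0.5)
print("ways to place 3 heads among 10 flips:", comb(10, 3))
print(f"P(X = 3) = {prob:.4f}")
```

The coefficient is 120 and 120 * (0.5)^10 = 120 / 1024, so the result is roughly 0.117.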

The Binomial Distribution is a versatile model. For instance, it gives the probability that a certain number of tests succeed out of a fixed number of attempts, or among a group of a fixed size.

Here are some examples: the probability that a given number of coin flips out of a fixed total land heads; the probability that a certain number of sales result from an outbound call list; or the probability of getting a certain number of answers correct on a test. All of these have real-world applications.

Understanding the Binomial Distribution can assist with making more accurate predictions.

Comparison Table of Bernoulli and Binomial

Here’s a comparison table highlighting the key differences between Bernoulli and Binomial distributions:

| Property | Bernoulli Distribution | Binomial Distribution |
|---|---|---|
| Definition | Describes a single trial with two possible outcomes | Describes the number of successes in a fixed number of independent trials |
| Number of Trials | 1 | n (fixed; n = 1 gives the Bernoulli case) |
| Possible Outcomes | Success (1) or Failure (0) | Success (1) or Failure (0) on each trial; the count of successes ranges from 0 to n |
| Probability of Success | p, fixed for the single trial | p, constant across all trials |
| Formula | P(X = x) = p^x * (1-p)^(1-x), for x = 0 or 1 | P(X = x) = (n choose x) * p^x * (1-p)^(n-x), for x = 0, 1, ..., n |
| Notation | X ~ Bernoulli(p) | X ~ Binomial(n, p) |
| Examples | Coin flipping, success/failure experiments with one trial | Counting successes in a fixed number of trials, analyzing survey responses |
| Mean | p | np |
| Variance | p(1-p) | np(1-p) |
| Graphical Representation | Probability mass function (PMF) with two bars | Probability mass function (PMF) with n + 1 bars |
| Relationship to Other Distributions | A special case of Binomial distribution with n=1 | Generalization of Bernoulli distribution |

Note: In the table, ‘p’ represents the probability of success, ‘n’ represents the number of trials, ‘x’ represents the number of successes, and ‘choose’ represents the binomial coefficient.
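The Mean and Variance rows of the table can be checked numerically. The sketch below computes both directly from the probability mass function and compares them with np and np(1-p). The helper names and the sample values n = 1, n = 10, and p = 0.3 are assumptions chosen for illustration.

```python
from math import comb


def binomial_pmf(x: int, n: int, p: float) -> float:
    """Return P(X = x) for X ~ Binomial(n, p)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def mean_and_variance(n: int, p: float) -> tuple[float, float]:
    """Compute the mean and variance by summing over the PMF, not from the closed forms."""
    pmf = [binomial_pmf(x, n, p) for x in range(n + 1)]
    mean = sum(x * prob for x, prob in enumerate(pmf))
    variance = sum((x - mean) ** 2 * prob for x, prob in enumerate(pmf))
    return mean, variance


# n = 1 is the Bernoulli case; n = 10 is a general Binomial case.
for n, p in [(1, 0.3), (10, 0.3)]:
    mean, variance = mean_and_variance(n, p)
    print(f"n={n}, p={p}: mean={mean:.4f} (np={n * p:.4f}), "
          f"variance={variance:.4f} (np(1-p)={n * p * (1 - p):.4f})")
```

With n = 1 the results reduce to p and p(1-p), which matches the Bernoulli column.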

Single-trial experiment

A single trial experiment is one that is conducted only once. Researchers who want to know the outcome of a particular event, or do not have time or the desire to conduct multiple trials will use this type of experiment.

A single experiment can answer many questions:

What are the chances of an event occurring?

How does a treatment affect a certain outcome?

What relationship exists between two variables?

Researchers who are interested in the effects of a treatment can run a clinical trial. A researcher who wants to find the relationship between variables can run a correlational study.

One-trial experiments can be an extremely useful tool for researchers, but they also have some limitations. Participants, their environment, and the researcher’s bias are all factors to consider.

Understanding the limitations of single-trial studies can help researchers make better decisions.

Examples of single-trial experiments:

A researcher conducts a poll to determine what the public’s opinion is about a taxation policy.

A physician performs an experiment to determine the effectiveness of a drug.

A sociologist performs a correlational study to determine the relationship between education and income.


Probability of success and failure

The probabilities of success and failure describe how likely each outcome of a trial is. They are denoted p and q respectively, and they always sum to 1: p + q = 1.

Flip a penny, for instance: it lands heads with probability p and tails with probability q. When the two are equal (p = q = 0.5), each flip comes up heads 50% of the time and tails 50% of the time.

Probabilities let us anticipate outcomes over many repetitions, though not the result of any single event. Knowing, for instance, that the probability of heads is 0.5 lets us predict that about half of a large number of flips will come up heads.

Calculating the expected value of an event requires its probabilities. The expected value is the probability-weighted average of all possible outcomes. For example, if we bet on heads in a coin flip that pays $1, the expected value is $0.50 (assuming a loss pays nothing), because there is a 50% chance of winning $1 and a 50% chance of winning $0: 0.5 * $1 + 0.5 * $0 = $0.50.
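The idea that about half of many fair-coin flips come up heads can be illustrated with a small simulation. This is a sketch under stated assumptions: the function name `heads_fraction`, the flip counts, and the fixed seed are all chosen for the example, and a different seed will give slightly different fractions.

```python
import random


def heads_fraction(num_flips: int, p: float = 0.5, seed: int = 0) -> float:
    """Simulate num_flips coin flips and return the fraction that came up heads."""
    rng = random.Random(seed)  # assumed: fixed seed so the run is repeatable
    heads = sum(1 for _ in range(num_flips) if rng.random() < p)
    return heads / num_flips


# The observed fraction tends to settle near p as the number of flips grows.
for num_flips in (10, 100, 10_000, 100_000):
    print(f"{num_flips:>7} flips: fraction of heads = {heads_fraction(num_flips):.4f}")
```

Short runs can stray well away from 0.5, which is why a prediction of “about half” is only reliable over many flips.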
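The expected-value calculation for the $1 bet can be written as a probability-weighted sum. In this sketch the function name `expected_value` is hypothetical, and the payoff of $0 on tails is an assumption about what “losing” means in the bet.

```python
def expected_value(outcomes: list[tuple[float, float]]) -> float:
    """Return the probability-weighted average of (payoff, probability) pairs."""
    total_probability = sum(prob for _, prob in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(payoff * prob for payoff, prob in outcomes)


# Assumed bet: heads pays $1, tails pays $0, and the coin is fair.
bet_on_heads = [(1.0, 0.5), (0.0, 0.5)]
print(f"expected value = ${expected_value(bet_on_heads):.2f}")
```

If a loss instead cost the bettor $1, the second pair would be (-1.0, 0.5) and the expected value would be $0.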

The probability of success or failure is an integral concept in statistics and probability theory, used to predict the outcomes of events and to calculate expected values.

A coin-flipping experiment is a simple way to estimate the probability that a coin lands heads or tails: flip the coin several times, record the results, and use the observed frequencies as estimates. Anyone can conduct this experiment, and no special equipment is necessary.

Experiments like this let us evaluate the probability of random events. When flipping a fair coin 100 times, for instance, we expect about 50 heads, but the actual count may differ from the expected value because of randomness.

In a Bernoulli trial the two outcomes are usually called “success” and “failure”. The Bernoulli Distribution is a special case of the Binomial Distribution, which describes the number of successes in a series of independent Bernoulli trials; the Binomial Distribution is more complex but also more general. The Bernoulli Distribution is also related to the geometric distribution, which describes the number of Bernoulli trials required to obtain the first success.
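The number of Bernoulli trials needed to obtain a first success, mentioned above, is what the geometric distribution describes, and it can be simulated directly. The function name `trials_until_first_success`, the value p = 0.5, the sample size, and the seed are assumptions made for this sketch.

```python
import random


def trials_until_first_success(p: float, rng: random.Random) -> int:
    """Repeat Bernoulli(p) trials and return how many were run up to and including the first success."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    count = 1
    while rng.random() >= p:  # this trial failed, so run another one
        count += 1
    return count


p = 0.5                 # assumed: fair coin, heads counted as success
rng = random.Random(0)  # assumed: fixed seed so the run is repeatable
samples = [trials_until_first_success(p, rng) for _ in range(10_000)]
average = sum(samples) / len(samples)

print(f"average trials until first success: {average:.3f}")
print(f"theoretical mean 1/p:               {1 / p:.3f}")
```

The simulated average should land close to 1/p, the mean of a geometric distribution that counts trials up to and including the first success.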
Multiple independent trials are a collection of experiments in which the outcome of one does not affect the results of another. They are often used to estimate the probability of an event. For example, if we flip a penny 10 times and get heads 6 times, the estimated probability of heads is 6/10 = 0.6. According to the law of large numbers, the observed proportion moves closer to the true probability as the number of trials grows.

Statistics can then answer questions such as: what are the chances of three heads in five coin tosses? Binomial probabilities can be computed with software or statistical calculators, or by hand using the binomial formula: P(X = k) = (n choose k) * p^k * (1-p)^(n-k), where n is the total number of trials, k is the number of successes, p is the probability of success on each trial, and 1-p is the probability of failure. Each trial ends in success or failure, and every trial counts towards the total.

For instance, with p = 0.5 the probability of exactly two successes in five trials is (5 choose 2) * (0.5)^5 = 0.3125, and 10 trials would produce 5 successes on average.

A probability mass function (PMF) gives the probability of each possible outcome of a discrete distribution such as the Bernoulli Distribution. It is usually drawn as a bar chart with one bar per outcome.
A Bernoulli trial has two outcomes: success or failure. The probability of success is p and the probability of failure is q, and p + q = 1. The PMF of a Bernoulli Distribution shows the probability of each outcome. For p = 0.5, the graph has two bars of height 0.5: one at 0 (failure) and one at 1 (success). In general, the bar at 1 has height p and the bar at 0 has height q = 1 - p.

The same probabilities can be combined to answer questions about several trials. To calculate the probability of exactly two successes in three trials with p = 0.5, multiply the probability of two successes, (0.5)^2 = 0.25, by the probability of one failure, (0.5)^1 = 0.5, and by the number of ways to choose which two of the three trials succeed, (3 choose 2) = 3. The result is 3 * 0.25 * 0.5 = 0.375.

A PMF is a useful tool for understanding the probability of an event.
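A PMF can also be tabulated and drawn as a rough text bar chart. The sketch below does this for a single trial and for three trials at p = 0.5. Under the binomial formula, exactly two successes in three trials comes to 3 * (0.5)^2 * (0.5)^1 = 0.375. The helper names and the 40-character bar scale are assumptions made for the example.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Return P(X = k) for X ~ Binomial(n, p); n = 1 gives the Bernoulli case."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def print_pmf(n: int, p: float) -> dict[int, float]:
    """Print one text bar per outcome and return the PMF as a dict."""
    pmf = {k: binomial_pmf(k, n, p) for k in range(n + 1)}
    for k, prob in pmf.items():
        bar = "#" * round(prob * 40)  # assumed scale: 40 characters = probability 1
        print(f"  k={k}  {prob:.3f}  {bar}")
    return pmf


print("Bernoulli(0.5): two bars")
bernoulli = print_pmf(1, 0.5)

print("Binomial(3, 0.5): four bars")
three_trials = print_pmf(3, 0.5)
print("P(exactly 2 successes in 3 trials) =", three_trials[2])
```

The bars in each chart sum to 1, since every trial sequence produces exactly one count of successes.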

A coin, paper, and a pen or pencil are needed for this exercise. Flip the coin the number of times your instructions specify.

On the paper, record the result of every flip. Then divide the number of heads (or tails) by the total number of flips. For instance, if heads comes up six times out of 10, the estimated probability of heads is 6/10 = 0.6.

Relationship to other distributions

Multiple independent trials

Counting successes in a fixed number of trials

In a series of Bernoulli trials with success probability p, what is the likelihood that exactly 2 successes occur out of 10 trials?

On average, how many successes are expected from a series of Bernoulli trials with success probability p?

Binomial probabilities can be used to predict how many successes will occur in a given number of trials.

Graphical representation

Conclusion